Recent Posts

Big and Small, They Mined Them All (MHA Presidential Address, 2021)

In June 2021, the Mining History Association held its annual meeting in a virtual format for the first time, due to COVID-19. As incoming President of the organization, after ceremoniously “accepting” the official mining pick of office, I delivered the traditional presidential address via Zoom.

The talk, titled “Big and Small, They Mined Them All: Thinking About Scale in Mining History,” was really my chance to talk about the modern Nevada gold mining boom and specifically the Carlin Trend.

read more

Photos and Mining History

Note: This post ran as a Presidential Column in the Spring 2022 issue of the Mining History News.

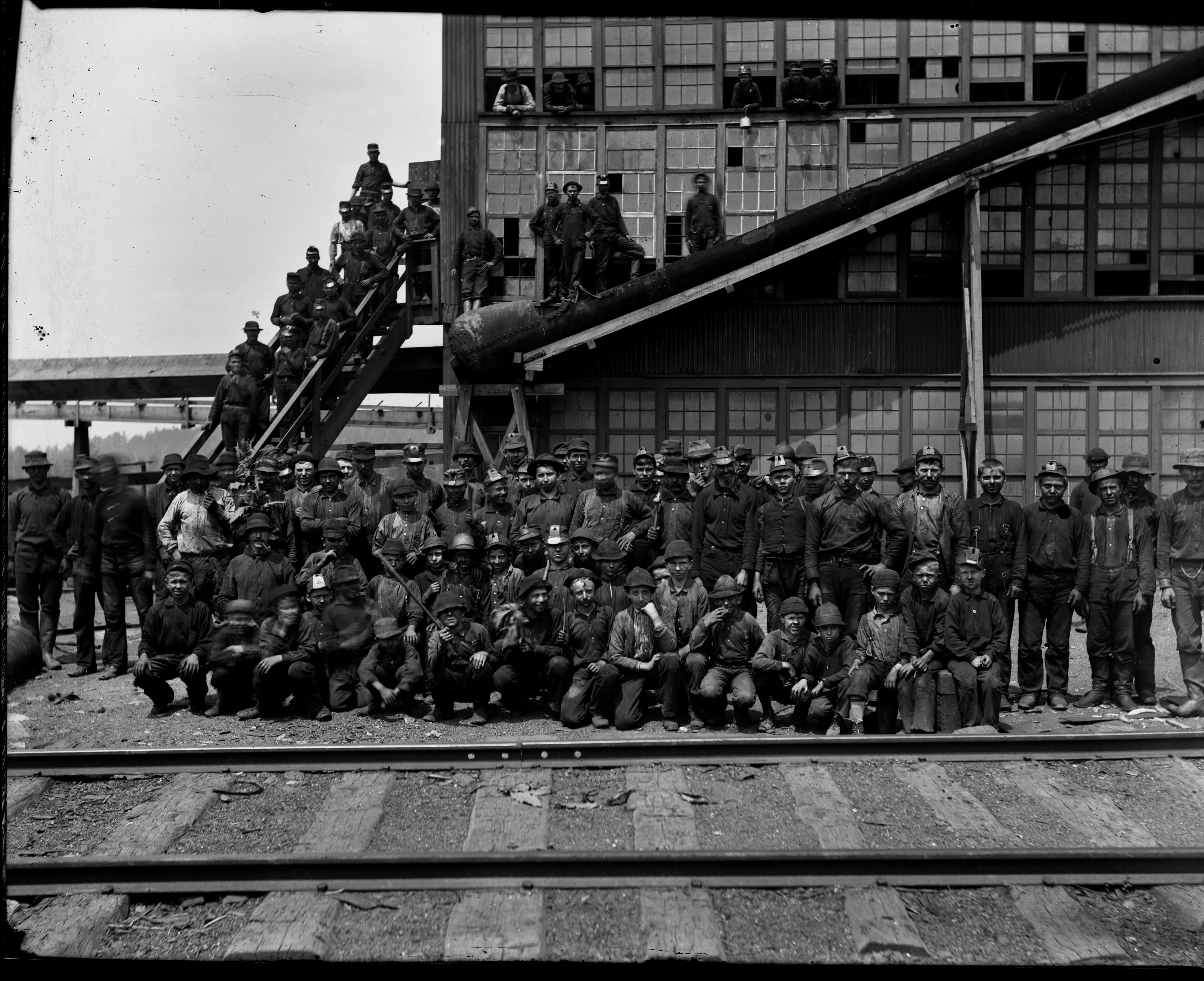

After reading the last newsletter, MHA member Hans Muessig wrote me with a suggestion for this column. “Historic photographs are a critical and I think underutilized resource in studying the past,” he argued, and I couldn’t agree more!

Historians are generally trained to pay closest attention to words and texts as sources, a preference that dates to the earliest years of the field’s professionalization in the 19th century.

read more

The Paper Record Behind Mining History

Note: This post ran as a Presidential Column in the Winter 2021/22 issue of the Mining History News.

I have a confession: I’m a historian, and yet I haven’t set foot in an archive since 2019 due to COVID-19. I’m getting antsy– I’ll look for digitized photos in online repositories, browse electronic versions of the Engineering and Mining Journal and Mining and Scientific Press, check out high-resolution historic newspapers at the Chronicling America site, and even peek at census images, but it’s not the same.

read more